November 13, 2024

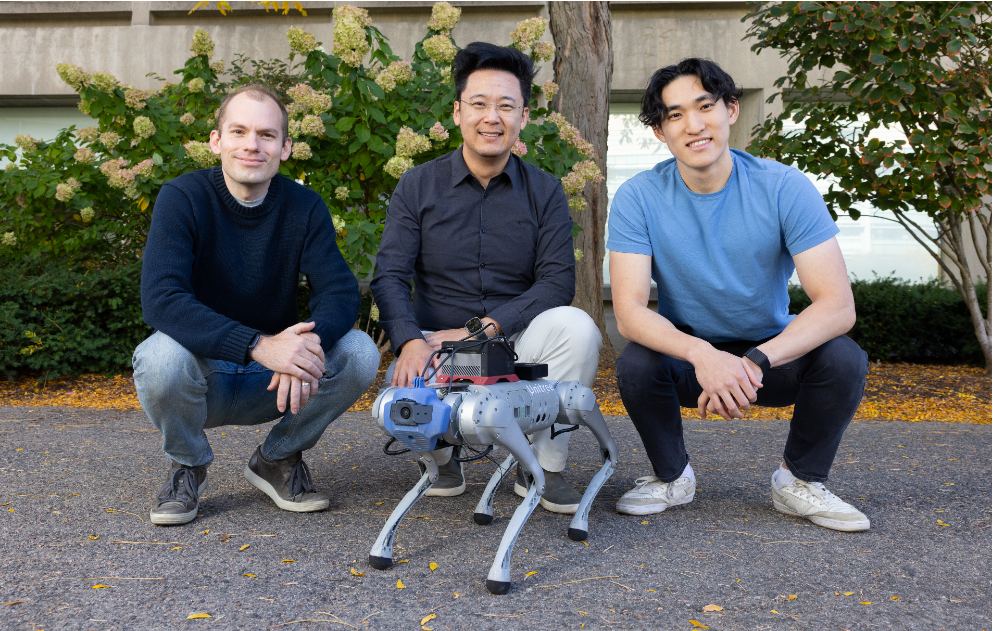

MIT CSAIL researchers have introduced LucidSim, a system that combines generative AI with physics simulation to train robots in diverse, realistic virtual environments without relying on real-world data. To narrow the “sim-to-real gap,” LucidSim uses large language models to generate structured descriptions of environments, converts those descriptions into images with generative models, and relies on physics simulation to keep the results physically accurate. The approach targets two persistent challenges in robotics: transferring skills learned in simulation to the real world, and obtaining sufficiently diverse training data.

The idea for LucidSim grew out of a casual discussion in Cambridge. The system includes a technique called Dreams In Motion (DIM) for creating dynamic, realistic training videos. By synthesizing diverse prompts through ChatGPT and combining them with depth maps and semantic masks, it produces training data that is both realistic and varied. Robots trained with LucidSim reached an 88% success rate, compared with 15% for robots trained on expert demonstration data.
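To make the data-generation idea concrete, here is a minimal sketch of how varied text prompts might be paired with fixed scene geometry before being handed to an image generator. It is not LucidSim's code: the word lists, the `GenerationRequest` class, and the functions `sample_prompt`, `render_geometry`, and `build_requests` are all hypothetical, a template sampler stands in for the LLM, and a toy grid stands in for the depth map and semantic mask.

```python
import random
from dataclasses import dataclass

# Hypothetical vocabulary. A template sampler stands in for the LLM here.
SETTINGS = ["alley", "stairwell", "park path", "warehouse"]
SURFACES = ["wet concrete", "worn brick", "gravel", "polished tile"]
LIGHTING = ["overcast light", "harsh noon sun", "dim evening light"]


@dataclass
class GenerationRequest:
    """One conditioning bundle for a (hypothetical) image generator."""
    prompt: str
    depth_map: list      # rows of floats, distance in metres
    semantic_mask: list  # rows of ints, 0 = ground, 1 = obstacle


def sample_prompt(rng: random.Random) -> str:
    """Build one scene description from randomly chosen parts."""
    return (f"a {rng.choice(SETTINGS)} with {rng.choice(SURFACES)} "
            f"under {rng.choice(LIGHTING)}")


def render_geometry(width: int, height: int, obstacle_rows: range):
    """Toy stand-in for a simulator: depth grows toward the top of the
    image, and the given rows are labelled as an obstacle."""
    depth_map = [[1.0 + 0.5 * (height - 1 - r)] * width for r in range(height)]
    semantic_mask = [[1 if r in obstacle_rows else 0] * width
                     for r in range(height)]
    return depth_map, semantic_mask


def build_requests(n: int, seed: int = 0):
    """Pair n sampled prompts with one shared piece of scene geometry."""
    rng = random.Random(seed)
    depth_map, semantic_mask = render_geometry(8, 6, range(2, 4))
    return [GenerationRequest(sample_prompt(rng), depth_map, semantic_mask)
            for _ in range(n)]


if __name__ == "__main__":
    for request in build_requests(3):
        print(request.prompt)
```

In this sketch every request shares the same geometry while the prompt changes, which is one simple way to vary appearance without changing the physical layout the robot has to handle.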

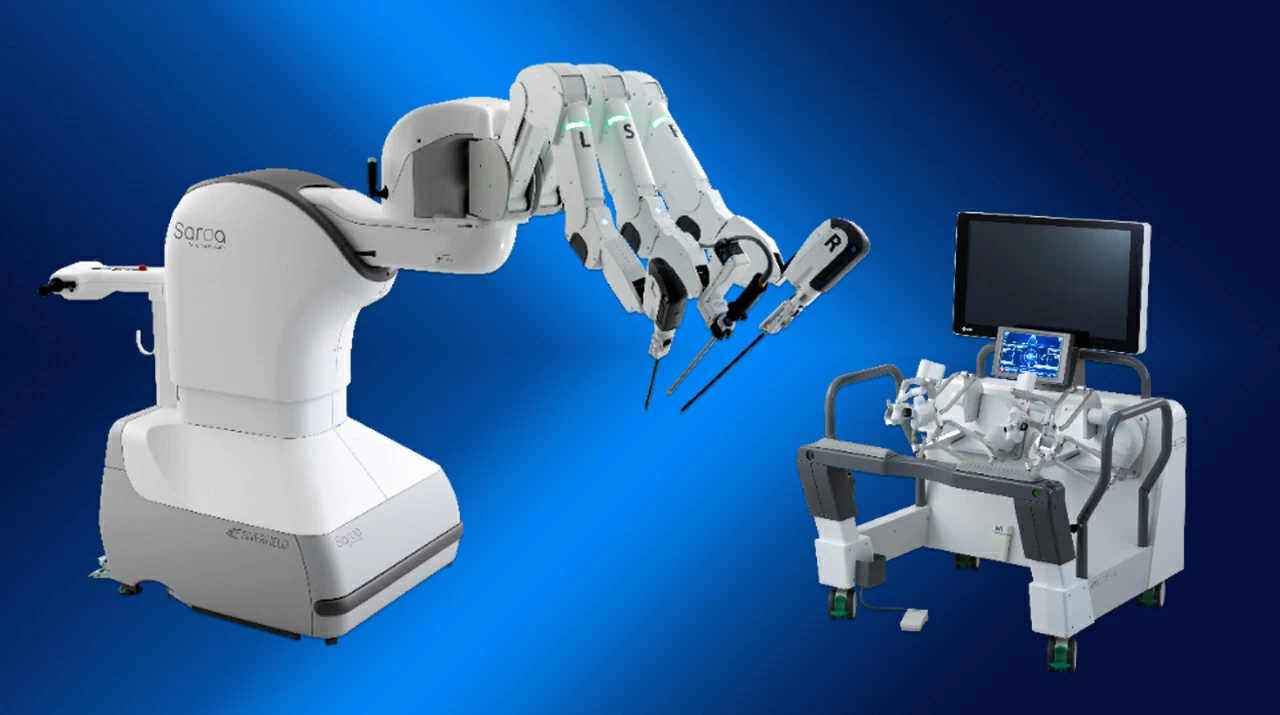

LucidSim’s potential extends beyond quadruped locomotion to areas such as mobile manipulation. Because it removes the need to set up real-world scenes, the system can scale more easily, paving the way for machines to learn complex skills entirely in virtual environments. The researchers will present their findings at the Conference on Robot Learning, highlighting the framework's promise for accelerating real-world robotic deployment.

https://www.csail.mit.edu/news/can-robots-learn-machine-dreams