The European Union’s Artificial Intelligence Act officially came into effect, establishing comprehensive regulations for AI systems across member states. The Act aims to ensure transparency, safety, and ethical standards in AI development and deployment.

The European Union’s Artificial Intelligence Act (AI Act), which entered into force on August 1, 2024, represents the world’s first comprehensive legal framework for artificial intelligence. This legislation aims to promote the development and adoption of safe and trustworthy AI systems across the EU, addressing potential risks to citizens’ health, safety, and fundamental rights.

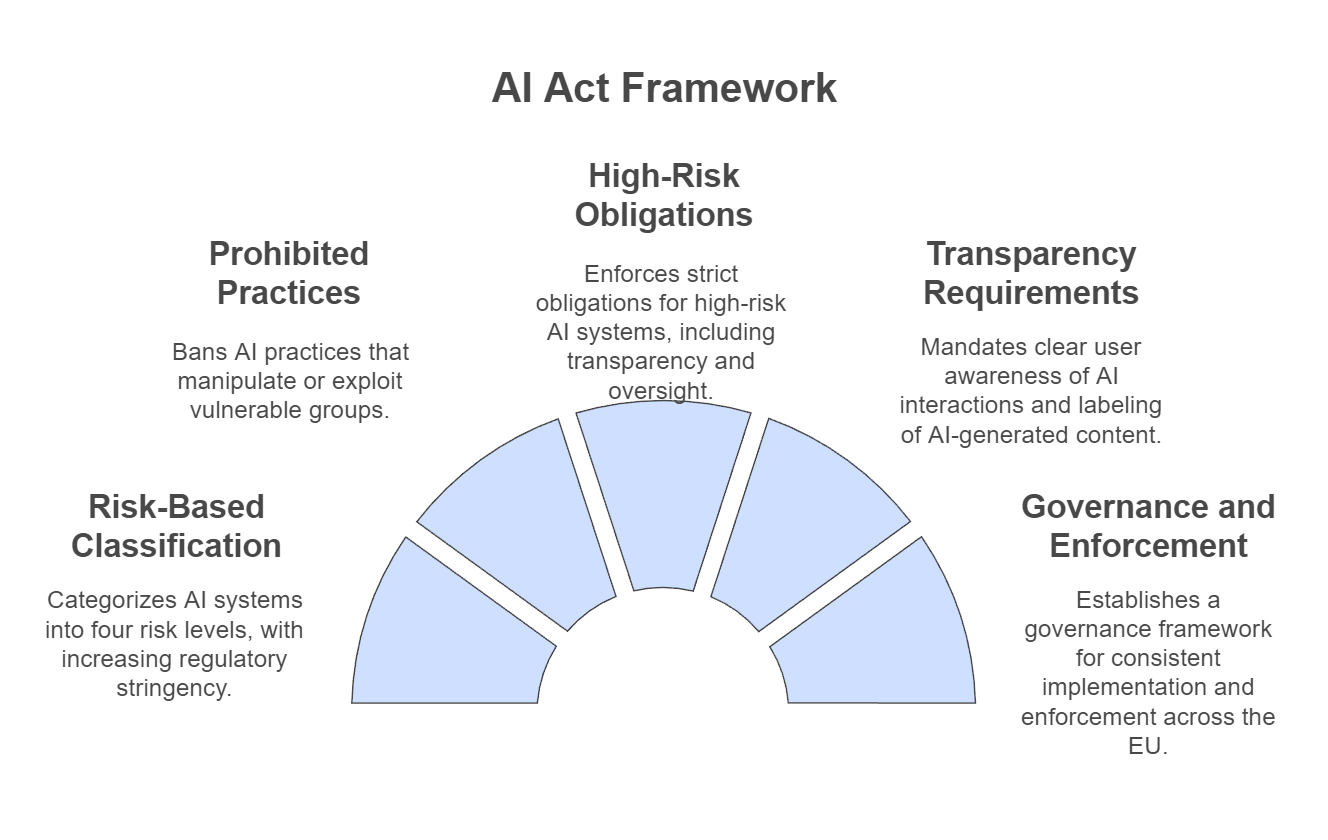

Key Features of the AI Act:

- Risk-Based Classification:

  - The AI Act employs a risk-based approach, categorizing AI systems into four levels: minimal, limited, high, and unacceptable risk. The stringency of regulatory requirements increases with the potential risk associated with the AI system. (Artificial Intelligence Act)

- Prohibited AI Practices:

  - Certain AI practices are outright banned under the AI Act, including:

    - AI systems that deploy subliminal, manipulative, or deceptive techniques to distort behavior and impair informed decision-making, causing significant harm.

    - Exploitation of vulnerabilities related to age, disability, or socio-economic circumstances to distort behavior, causing significant harm. (Artificial Intelligence Act)

- High-Risk AI Systems:

  - AI systems classified as high-risk are subject to strict obligations, such as:

    - Rigorous risk assessments and mitigation measures.

    - High standards of transparency, including clear information about the system’s capabilities and limitations.

    - Human oversight to prevent or minimize risks. (Artificial Intelligence Act)

- Transparency Requirements:

  - AI systems with specific transparency risks, such as chatbots, must clearly inform users that they are interacting with a machine. Certain AI-generated content must also be labeled as such. (European Commission)

- Governance and Enforcement:

  - The AI Act establishes a governance framework that includes:

    - The European Artificial Intelligence Board, which facilitates consistent implementation across the EU.

    - National competent authorities, designated by each member state, which are responsible for enforcing the regulation within their respective territories. (arXiv)

Implications for Businesses and AI Developers:

- Compliance Obligations: Organizations operating within the EU or providing AI systems to the EU market must ensure their AI technologies comply with the AI Act’s requirements, particularly if their systems are classified as high-risk.

- Innovation and Competitiveness: While the AI Act aims to foster innovation by providing clear guidelines, businesses must balance compliance with maintaining competitiveness, especially in rapidly evolving AI sectors.

- Global Influence: The AI Act is expected to set a global standard for AI regulation, influencing international norms and potentially impacting non-EU companies that offer AI products and services within the EU.

In summary, the EU’s AI Act establishes a pioneering regulatory framework designed to ensure the ethical and safe deployment of AI technologies, balancing innovation with the protection of fundamental rights and societal values.