Technological Advancements

Scalable 3D Connection Structure

Fujitsu and RIKEN’s 256-qubit superconducting quantum processor introduces a scalable three-dimensional (3D) connection structure that integrates more qubits without a complete redesign of the chip architecture. The design arranges repeating 4-qubit unit cells in a 3D configuration, reusing the unit cell layout of the earlier 64-qubit system (fujitsu.com). This modular approach shows that the architecture can scale from 64 to 256 qubits by tiling additional unit cells (fujitsu.com). The 3D interconnect scheme is enabled by advanced packaging techniques, for example superconducting through-silicon vias (TSVs) and bump bonds, which stack or link multiple qubit chips or layers. By implementing a 3D contact structure, the team built a larger processor from smaller units, showing a clear path to higher qubit counts (fujitsu.com). This matters because future scaling (to 1,000 qubits and beyond) can proceed without fundamentally changing the qubit design, which simplifies development and preserves compatibility with existing control schemes (quantumcomputingreport.com).
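The tiling arithmetic can be made concrete with a short sketch. It uses only the 4-qubit unit cell size stated above; the function name is illustrative, and nothing here describes how the cells are physically arranged on the chip.

```python
# Minimal sketch: how many 4-qubit unit cells each processor size implies.
# The unit-cell size comes from the text; the helper name is hypothetical.
UNIT_CELL_QUBITS = 4


def unit_cells_needed(total_qubits: int) -> int:
    """Return the number of unit cells required to reach total_qubits."""
    if total_qubits <= 0 or total_qubits % UNIT_CELL_QUBITS != 0:
        raise ValueError("qubit count must be a positive multiple of the unit-cell size")
    return total_qubits // UNIT_CELL_QUBITS


if __name__ == "__main__":
    # 64 and 256 are the existing systems; 1024 is the roadmap-scale target.
    for qubits in (64, 256, 1024):
        print(f"{qubits} qubits -> {unit_cells_needed(qubits)} unit cells")
```

Each 4× step in qubit count is also a 4× step in unit cells, which is why the same cell design can be reused without modification.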

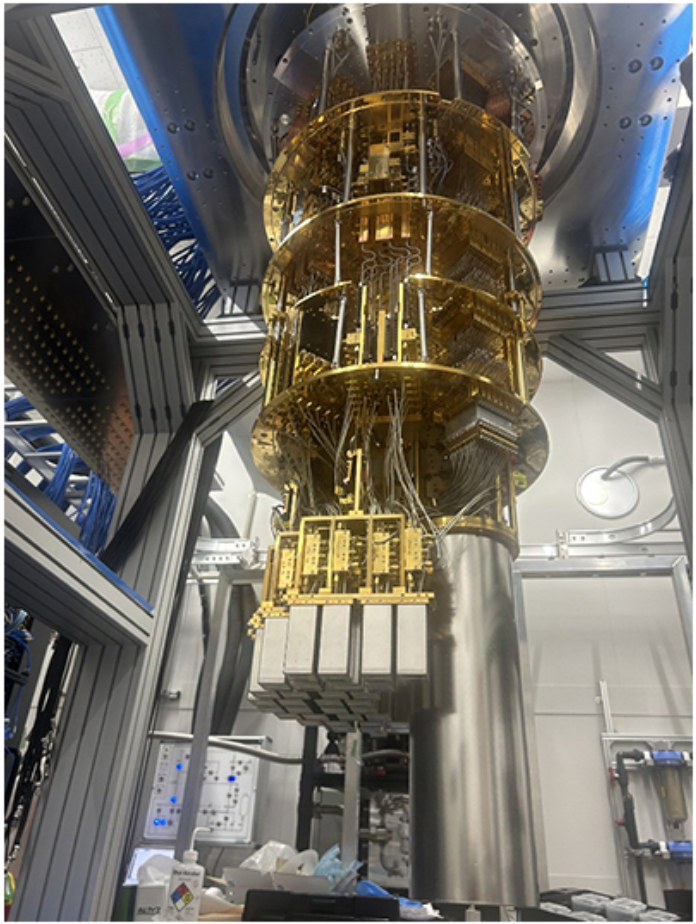

High-Density Integration in the Dilution Refrigerator

To accommodate four times as many qubits, the engineers quadrupled the implementation density of components within the dilution refrigerator that houses the quantum processor (fujitsu.com). The additional wiring, control electronics, and filtering hardware needed for 256 qubits were integrated densely enough that the entire setup fits into the same cryogenic unit previously used for the 64-qubit system (fujitsu.com). This high-density integration required careful signal routing and compact packaging of the qubit chips and interconnects. A key challenge was ensuring that a 4× increase in component count did not overwhelm the refrigerator’s cooling power or physical space. Fujitsu and RIKEN addressed this by redesigning the internal layout and signal delivery (plausibly with techniques such as high-density flexible cabling and multiplexing) to minimize heat load and space usage (docs.quantum.ibm.com; quantumcomputingreport.com). The result is a 256-qubit system that operates within the same cryostat as its 64-qubit predecessor, showing that substantial scaling is possible without a proportionate expansion of the cryogenic infrastructure (quantumcomputingreport.com). This level of integration is an important step toward larger quantum computers: it indicates that more qubits can be packed into a given volume through engineering rather than a bigger refrigerator.

Thermal Design Optimizations

Scaling up to 256 qubits in a single cryogenic unit required meticulous thermal design optimizations. The dilution refrigerator must hold the qubits at temperatures on the order of 10–20 millikelvin so that thermal excitations are suppressed and the qubits remain coherent. Adding many more qubits and control lines increases heat dissipation (from electrical currents, amplifiers, etc.) and can disturb this delicate low-temperature environment. Fujitsu and RIKEN implemented a “cutting-edge thermal design” that balances the heat generated by the control electronics against the cooling power of the refrigerator (fujitsu.com). In practice, this meant improving heat sinking, isolating components, and optimizing the placement of cables and filters to reduce thermal load. The design maintains the necessary ultra-high vacuum and ultra-low temperature conditions despite the higher component density (fujitsu.com). By keeping the qubits at ~20 mK even with four times more components, the system preserves qubit coherence and performance. This thermal optimization was a critical enabler for the 256-qubit machine: it addressed the key scaling challenge of removing excess heat, so that the larger system can operate as stably as the smaller 64-qubit system (fujitsu.com). The successful cooling of a much denser system provides confidence that the engineering approach can be extended to even larger quantum processors.

Practical Applications

With 256 qubits at hand, Fujitsu and RIKEN’s new quantum computer opens the door to more ambitious real-world applications in science and technology. Notably, the system is expected to advance molecular analysis in chemistry and materials science. Quantum computers can simulate molecular structures and interactions at the quantum level, a task that becomes exponentially complex for classical computers as molecules grow in size. The expanded 256-qubit capacity means researchers can attempt to model larger and more complex molecules than was possible with the previous 64-qubit machine (riken.jp). For instance, more qubits allow encoding of more electronic orbitals or higher precision in quantum simulations, enabling studies of complex chemical reactions, drug molecules, or new materials. This capability could improve our understanding of pharmaceuticals, catalysts, or high-temperature superconductors by providing computational insights at the quantum mechanical level.

Another critical application area is quantum error correction (QEC) research. QEC is essential for scaling quantum computers to useful sizes because it counteracts the noise and decoherence that quantum states suffer. However, QEC schemes require many physical qubits to encode a single logical (error-protected) qubit. A 256-qubit device provides a testbed for implementing and experimenting with error correction algorithms that were not feasible on smaller systems (riken.jp). Researchers can use subsets of the qubits to form logical qubits with error-correcting codes (for example, small surface codes or concatenated codes) and study their performance. The new machine could, for example, support a prototype logical qubit encoded with dozens of physical qubits, leaving additional qubits available to measure error syndromes and apply corrections. Demonstrating even a basic error-corrected logical qubit or running QEC routines on the 256-qubit system would be a major step forward, as it provides practical experience toward building fault-tolerant quantum computers. In summary, the increased qubit count allows more complex simulations in chemistry and materials and enables meaningful progress in quantum error correction, two areas seen as milestones on the path to practical quantum computing (quantumcomputingreport.com).
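To give a sense of scale for "dozens of physical qubits", the sketch below counts qubits for one rotated surface-code patch, which uses d² data qubits and d² − 1 measurement qubits at code distance d. The choice of the rotated surface code is an assumption for illustration; the source does not say which codes will be run, and the estimate ignores connectivity, gate fidelity, and routing constraints on the real chip.

```python
# Minimal sketch: physical-qubit cost of one rotated surface-code logical qubit.
# Assumption: rotated surface code, d*d data qubits plus d*d - 1 ancilla qubits.


def rotated_surface_code_qubits(distance: int) -> int:
    """Physical qubits for a single distance-d rotated surface-code patch."""
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance must be a positive odd integer")
    return 2 * distance * distance - 1


def largest_distance_that_fits(qubit_budget: int) -> int:
    """Largest odd distance whose patch fits in qubit_budget (0 if none fits)."""
    best = 0
    distance = 1
    while rotated_surface_code_qubits(distance) <= qubit_budget:
        best = distance
        distance += 2
    return best


if __name__ == "__main__":
    for budget in (64, 256):
        d = largest_distance_that_fits(budget)
        print(f"budget {budget}: distance {d}, "
              f"{rotated_surface_code_qubits(d)} physical qubits")
```

Under these assumptions a single patch at distance 3 or 5 already uses 17 or 49 qubits, which matches the "dozens of physical qubits" figure above.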

It’s worth noting that Fujitsu plans to deploy this 256-qubit processor as part of a hybrid quantum computing platform, interfacing with classical high-performance computers (quantumcomputingreport.com). In practical terms, this means the quantum machine can work in concert with classical HPC resources to solve problems: for example, a classical system could handle parts of a molecular simulation while offloading the quantum-mechanical portions to the 256-qubit device. This hybrid approach is expected to accelerate practical R&D in fields like drug discovery, finance, and materials science by leveraging the strengths of both quantum and classical computation.
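The division of labor in a hybrid workflow can be sketched as a variational loop: a classical optimizer proposes parameters, and the quantum device returns an expectation value. In the sketch below the quantum call is replaced by a classical stand-in (the exact value of ⟨Z⟩ after an RY(θ) rotation on |0⟩, which is cos θ), so it runs anywhere. All function names are hypothetical and do not represent Fujitsu’s platform API.

```python
# Minimal sketch of a hybrid quantum-classical loop with a classical stand-in.
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for a QPU call: <Z> after RY(theta) on |0>, i.e. cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two shifted evaluations, as a real device would provide."""
    plus = quantum_expectation(theta + math.pi / 2)
    minus = quantum_expectation(theta - math.pi / 2)
    return 0.5 * (plus - minus)


def hybrid_minimize(theta: float = 0.1, learning_rate: float = 0.4,
                    steps: int = 100) -> tuple[float, float]:
    """Classical gradient descent driving repeated 'quantum' evaluations."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


if __name__ == "__main__":
    theta, energy = hybrid_minimize()
    print(f"theta = {theta:.4f}, energy = {energy:.4f}")
```

On real hardware the expectation value would be estimated from repeated measurements and would carry sampling noise, so the optimizer and step size would need to tolerate it.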

Comparative Evaluation

Architecture: Gate-Based vs. Annealing

The Fujitsu–RIKEN 256-qubit system and IBM’s 156-qubit Heron R2 processor are both gate-based superconducting quantum computers, whereas D-Wave’s Advantage2 (with 4,400+ qubits) is a quantum annealer. Fujitsu and IBM use qubits (likely transmon qubits in superconducting circuits) that can be entangled and manipulated with logic gates to perform universal quantum algorithms. Fujitsu’s design employs the 3D unit-cell architecture discussed above, and IBM’s Heron uses IBM’s established heavy-hexagonal lattice coupling topology for its qubits (docs.quantum.ibm.com). The heavy-hex lattice limits each qubit’s connections to reduce crosstalk, enabling improved gate fidelities. Heron R2 (introduced in 2024) incorporates 156 qubits in a heavy-hex layout, using innovations like high-density flexible cabling for signal delivery and introducing mitigation for two-level system (TLS) defects to boost coherence times (docs.quantum.ibm.com). Fujitsu’s 256-qubit chip likely uses a more regular 2D lattice of qubits (grouped in 4-qubit modules) with 3D interconnects between modules (quantumcomputingreport.com), emphasizing scalable packaging. Both Fujitsu and IBM architectures are geared toward eventually implementing error-corrected, general-purpose quantum computing.

In contrast, D-Wave’s Advantage2 is built on a fundamentally different paradigm (quantum annealing). Its 4,400+ superconducting qubits are used in an analog manner: they are coupled in a fixed hardware graph (with 20-way connectivity in the Advantage2 system) and evolve collectively to solve optimization problems (quantumcomputingreport.com). Rather than executing gate sequences, D-Wave’s qubits start in a superposition and slowly evolve (anneal) under a tunable Hamiltonian to find low-energy solutions to a problem encoded in their couplings. The Advantage2 uses a new topology (often referred to as Zephyr), in which each qubit can interact with up to 20 others, compared to about 15 in the previous Pegasus topology. This higher connectivity and qubit count improve the ability to embed larger or more complex optimization problems. However, D-Wave’s qubits are not individually addressable by arbitrary gates, and the device is not universal in the way IBM’s and Fujitsu’s are; it is specialized for heuristic solving of specific mathematical problems (like QUBO/Ising models). In summary, Fujitsu/RIKEN and IBM have gate-model machines geared toward general algorithms and future fault tolerance, while D-Wave’s system is a specialized annealer designed for optimization tasks.
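For readers unfamiliar with QUBO models, the sketch below shows the kind of objective an annealer is asked to minimize: a quadratic function over binary variables. It solves a three-variable toy instance by exhaustive search, which is only feasible at tiny sizes; it does not use D-Wave’s software, and the problem (pick exactly one of three options, with option 1 slightly cheaper) is made up for illustration.

```python
# Minimal sketch: a QUBO objective and a brute-force minimizer for tiny instances.
from itertools import product

# QUBO coefficients keyed by variable index pairs. Diagonal terms are linear
# costs; off-diagonal terms penalize selecting two options at once.
TOY_QUBO = {
    (0, 0): -1.0, (1, 1): -1.5, (2, 2): -1.0,
    (0, 1): 2.0, (0, 2): 2.0, (1, 2): 2.0,
}


def qubo_energy(assignment: tuple[int, ...], qubo: dict) -> float:
    """Energy sum of Q[i, j] * x_i * x_j for a 0/1 assignment."""
    return sum(coeff * assignment[i] * assignment[j]
               for (i, j), coeff in qubo.items())


def brute_force_minimum(qubo: dict, num_vars: int) -> tuple[tuple[int, ...], float]:
    """Try every 0/1 assignment and return the lowest-energy one."""
    best = min(product((0, 1), repeat=num_vars),
               key=lambda x: qubo_energy(x, qubo))
    return best, qubo_energy(best, qubo)


if __name__ == "__main__":
    solution, energy = brute_force_minimum(TOY_QUBO, 3)
    print(solution, energy)
```

Exhaustive search doubles in cost with every added variable, which is the motivation for heuristic hardware such as an annealer once instances grow to hundreds or thousands of variables.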

Scalability and Qubit Count

When comparing qubit counts, D-Wave’s Advantage2 far exceeds the others in sheer number of qubits (thousands versus hundreds), but these numbers cannot be equated one-to-one across different architectures. Gate-based qubits (like those in the Fujitsu and IBM machines) are harder to scale in number due to strict requirements on coherence and control; each additional qubit requires high-precision control lines and introduces potential crosstalk and error sources. IBM’s largest gate-based chip to date is the 1,121-qubit Condor processor (unveiled in December 2023), which followed the 433-qubit Osprey (2022). IBM’s Heron family, while featuring fewer qubits (133 in R1, 156 in R2), is designed as a smaller building block focusing on reliability and modularity (docs.quantum.ibm.com), possibly with multi-chip scaling in mind. Fujitsu’s 256-qubit system demonstrates an ability to pack a relatively large number of qubits by tiling smaller units in 3D, indicating a modular scaling approach that could be extended further (quantumcomputingreport.com). Fujitsu and RIKEN demonstrated that a 4× increase (64 to 256) is achievable in one step, and their roadmap aims for 1,000 qubits by effectively repeating this scaling strategy (256→1024 qubits) (fujitsu.com).

Quantum annealers, like D-Wave’s, inherently scale to thousands of qubits because each qubit is relatively simple and the system performs one global operation (the anneal) rather than many sequential gate operations. D-Wave has steadily increased qubit counts from 128 (in early models) to >5,000 in the current Advantage systems, and the Advantage2 prototype has 4,400+ functional qubits with a design target of around 7,000 qubits in the full version (quantumcomputingreport.com). This highlights scalability in terms of qubit count, but it comes with diminishing returns unless connectivity and coherence improve (which Advantage2 addresses with the 20-way coupling and doubled coherence time). It’s important to note that while adding qubits in an annealer increases the size of problems it can handle, scaling gate-based machines adds both opportunity (more complex algorithms, larger data encodings) and complexity (need for error correction, more control electronics). In short, Fujitsu/RIKEN and IBM focus on a roadmap of gradual qubit scaling with an eye on maintaining coherence and low error rates, whereas D-Wave emphasizes raw qubit count and connectivity to tackle larger optimization problems.

Performance and Error Rates

Direct performance comparisons between these systems are tricky due to their different natures. Gate-based quantum computers (IBM’s and Fujitsu’s) are often evaluated by metrics like quantum volume, circuit depth they can achieve, or error rates on benchmark algorithms. For instance, IBM has reported high fidelities for single- and two-qubit gates on Heron R2, thanks to improvements like TLS defect mitigation and refined fabrication, which contribute to more stable operation (docs.quantum.ibm.com). The Fujitsu–RIKEN team has not publicly released detailed error rates yet, but the expectation is that the 256-qubit system continues the high coherence and gate fidelity demonstrated in the 64-qubit prototype. Both systems will still have error rates per gate (typically on the order of 0.1–1% for two-qubit gates in state-of-the-art devices), which limit the size of circuits that can be run reliably. Performance for these gate-based machines is also a function of their control electronics and software stack: for example, how well they can calibrate so many qubits and how fast they can run gates and readouts. IBM’s Heron R2 benefits from fast signal delivery (via the flex cables) and presumably improved cryo-control architectures, which support running more complex circuits with reasonable throughput (docs.quantum.ibm.com).
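A rough calculation shows why per-gate error rates of 0.1–1% cap circuit size. The sketch below assumes independent, uncorrelated two-qubit gate errors and ignores single-qubit gates, readout errors, and idle decoherence, so it is an optimistic back-of-the-envelope estimate rather than a benchmark of any specific device.

```python
# Minimal sketch: circuit success probability under independent gate errors.
# Assumption: each two-qubit gate fails independently with probability p.
import math


def estimated_success_probability(error_per_gate: float, num_gates: int) -> float:
    """Probability that no gate fails: (1 - p) ** n."""
    return (1.0 - error_per_gate) ** num_gates


def max_gates_for_target(error_per_gate: float, target_success: float) -> int:
    """Largest gate count whose estimated success stays at or above the target."""
    return math.floor(math.log(target_success) / math.log(1.0 - error_per_gate))


if __name__ == "__main__":
    for p in (0.01, 0.001):
        n = max_gates_for_target(p, 0.5)
        print(f"error {p:.3f}: about {n} two-qubit gates before success drops below 50%")
```

Under this model a tenfold reduction in gate error buys roughly a tenfold increase in usable gate count, which is why fidelity improvements matter as much as qubit count for gate-based machines.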

D-Wave’s performance is measured very differently. As an annealer, it doesn’t run gate-by-gate algorithms but rather solves problems by finding low-energy states. Performance is often reported in terms of solution quality and time-to-solution for specific classes of problems (like optimization benchmarks) compared to classical algorithms. The Advantage2, with its improvements, has shown significant speed-ups on certain problems relative to its predecessor. D-Wave reported that the new 4,400-qubit prototype can solve some complex optimization instances (e.g. materials science or scheduling problems) up to 25,000× faster than the previous generation in internal tests (quantumcomputingreport.com). It also delivers more consistent, higher-precision results due to increased qubit coherence and energy scale (quantumcomputingreport.com). However, these speed-ups are not general; they apply to the narrow set of problems suited to quantum annealing. Moreover, annealers don’t currently use error correction, so while they can employ thousands of qubits, each qubit is relatively noisy (though the noise is partly mitigated by the physics of annealing). In contrast, IBM and Fujitsu’s gate-based machines, with their lower per-operation error rates, can in principle eventually be scaled with error correction to solve a wide range of problems exactly, not just approximately. In summary, IBM and Fujitsu emphasize high-fidelity operations and reproducibility (crucial for algorithmic performance and eventual error-corrected computation), whereas D-Wave emphasizes raw speed on certain tasks and analog tolerance for errors.

Intended Use Cases

The three systems also diverge in their intended applications. Fujitsu–RIKEN’s 256-qubit machine and IBM’s Heron R2 are general-purpose quantum processors. They are designed to execute a variety of quantum algorithms, from simulating chemistry and materials to solving linear algebra problems, optimization via QAOA, and even running small instances of Shor’s or Grover’s algorithms. Fujitsu specifically highlighted applications in quantum chemistry (molecular analysis) and as a testbed for quantum error correction research, aligning with areas that benefit from more qubits (riken.jp). IBM’s systems are typically used through the IBM Quantum cloud for a broad range of research and exploratory applications by industry and academia, for example exploring machine learning with quantum kernels, optimization problems via variational algorithms, and foundational quantum algorithm development. In the near term, these gate-based machines will be used in a hybrid computing context, often solving parts of problems that are quantum-friendly while classical computers handle the rest. Both Fujitsu and IBM ultimately aim to use these systems as stepping stones toward larger, fault-tolerant quantum computers capable of tackling problems like drug discovery, complex optimization, cryptography (breaking certain cryptosystems or developing new ones), and large-scale scientific simulations.

D-Wave’s Advantage2, on the other hand, is purpose-built for combinatorial optimization and sampling problems. Its primary use cases are in industries and domains where problems can be formulated as minimizing an objective function, such as route planning, scheduling, portfolio optimization, constraint satisfaction, and certain machine learning tasks (like clustering or feature selection) that leverage Ising or QUBO formulations. D-Wave’s thousands of qubits allow it to represent and solve relatively large instances of these problems, although minor embedding overhead typically leaves fewer logical variables than physical qubits. Many of D-Wave’s customers have used their quantum annealers for tasks like optimizing factory workflows, traffic flow, financial risk optimizations, or even protein folding approximations. With Advantage2’s increased connectivity and coherence, it can embed more complex problem graphs natively, reducing the need for extra qubits to map logical variables onto the hardware graph. However, D-Wave’s system is not intended for running arbitrary quantum algorithms: you would not use it for Shor’s algorithm, for example. In contrast, Fujitsu’s and IBM’s gate-based systems are much more flexible, able to run any quantum circuit that fits within their qubit and depth limits. Those systems are well-suited for research in quantum algorithms and can in principle tackle a broader array of problems (albeit at a smaller scale currently).

In summary, Fujitsu/RIKEN’s 256-qubit and IBM’s 156-qubit processors represent the cutting edge of gate-based quantum computing aimed at universal applications (with current emphasis on chemistry, materials, and algorithm development), while D-Wave’s 4,400-qubit Advantage2 represents the forefront of quantum annealing, targeting specialized optimization tasks at an unprecedented scale. Each has its niche: gate-based machines strive for long-term impact via programmability and accuracy, and annealers provide near-term solutions for certain optimization problems via sheer qubit count and specialized design.

Roadmap to 1,000 Qubits

Fujitsu and RIKEN have outlined a clear roadmap to reach 1,000 qubits by 2026, building on the scalable design principles proven with the 256-qubit system. According to official statements, the first 1,000-qubit superconducting quantum computer is scheduled for installation in 2026 at a new facility in Fujitsu’s Technology Park (fujitsu.com). Achieving roughly four times the qubit count of the current machine in about a year will likely rely on the same 3D unit-cell tiling approach, for example by integrating four 256-qubit modules (or otherwise enlarging the module count) to reach ~1024 qubits. Fujitsu’s 3D connection architecture is inherently modular, which suggests that a 1024-qubit processor could be built by quadrupling the tiled area again, just as the 256-qubit system quadrupled the 64-qubit one (fujitsu.com). This modular scaling will be combined with further innovations in cryogenic engineering and control electronics to handle the increased complexity. Fujitsu has already begun constructing the facility for the 1000-qubit system and is on track to make it available to users in fiscal year 2026 (fujitsu.com).

Beyond simply increasing qubit count, key technical challenges remain on the road to 1,000+ qubits. One major challenge is maintaining high coherence and low error rates as the system grows. More qubits and more interconnects can introduce noise, so improvements in qubit quality (materials, fabrication) and perhaps some form of quantum error mitigation or partial error correction will be important. Fujitsu and RIKEN have indicated that their second phase of R&D (extended through 2029) will focus on “developing key technologies to realize quantum computing with 1000 qubits” (fujitsu.com). This likely includes advances in cryogenic I/O and control, for example using cryogenic multiplexing to reduce the number of cables, or developing new microwave control hardware that can drive many qubits efficiently at low temperature. They may also explore improved packaging (even more layers or optimized 3D layouts) and circuit design refinements to keep qubit uniformity high across a larger chip. Heat management will scale in difficulty as well, so the thermal design must further evolve to dissipate the heat from 1000 qubits’ control lines. The fact that the 256-qubit system operates in the same refrigerator as the 64-qubit system gives hope that engineering can continue to stretch the capacity of current dilution refrigerators with only incremental upgrades (fujitsu.com), but a 1024-qubit system will push this to the limit.

Another aspect of the roadmap is the integration of the quantum system into practical use. Fujitsu plans to offer the 1000-qubit machine as part of its computing services, targeting global companies and research institutions for joint research by 2026 (fujitsu.com). Intermediate milestones on the way to 1000 qubits are plausible, though not announced: for example, a 512-qubit interim test, or demonstrations of key subsystems (like a multi-chip module with two 256-qubit chips working in unison) before the full 1000-qubit launch. By 2026, Fujitsu and RIKEN aim not only to have the hardware in place but also to have developed the software stack and hybrid algorithms that can use such a machine effectively. Looking further, the extension of their collaboration to 2029 hints at work beyond 1,000 qubits, potentially in the range of several thousand qubits (fujitsu.com). Achieving that will likely involve moving toward fault-tolerant architectures, incorporating quantum error correction so that logical qubit counts can grow as physical qubit numbers increase. In summary, the roadmap to 1,000 qubits centers on scaling up via modular 3D integration, advancing control electronics and cryogenics, and laying groundwork for error correction, with a targeted milestone of an operational 1000-qubit quantum computer in 2026 (fujitsu.com). This ambitious plan, if successful, would position Fujitsu and RIKEN at the forefront of superconducting quantum computing, bridging the gap between today’s prototypes and tomorrow’s fully scalable quantum machines.

Sources:

- Fujitsu & RIKEN Press Release, “Fujitsu and RIKEN develop world-leading 256-qubit superconducting quantum computer”, Fujitsu Global News (April 22, 2025), fujitsu.com.

- RIKEN News, “RIKEN and Fujitsu unveil world-leading 256-qubit quantum computer” (April 22, 2025), riken.jp.

- IBM Quantum Documentation, “Processor Types – Heron R2 (156-qubit heavy-hex lattice)”, IBM Quantum (2024), docs.quantum.ibm.com.

- Quantum Computing Report, “Fujitsu and RIKEN Develop 256-Qubit Superconducting Quantum Computer with Scalable 3D Architecture” (April 2025), quantumcomputingreport.com.

- Quantum Computing Report, “D-Wave Completes Calibration of New 4,400+ Qubit Advantage2 Processor” (Nov 2024), quantumcomputingreport.com.